Media Studies

Technical media are not docile, neutral mediators; rather, they are self-configuring, and their conditions determine the way human affects and meanings are generated and channeled in them.

Two aspects are intrinsic to the universal medium of the computer:

Media materialism names the insight that computers, as materialized practice and knowledge, are autonomous agents. They are seen not as extensions of man but, in their historical manifestations, as forming a machinic phylum in which technology and mathematics/logic mutually influence each other.

Process theories capture the temporal aspect of computers. Computer systems take effect only in their execution over time; switched off, they are merely dead matter. This processuality exhibits its own modes of time and realizes modes of perception and action beyond human capacity. This independent, non-human realm is in turn linked back to human perception and action, which it influences.

Media Materialism

Analog 2.0 - If a human brain is simulated in the not-too-distant future, the simulation will likely run on memristors.

Built into neuromorphic circuits, memristors will give rise to computer architectures that have very little in common with today's static computer hardware.

These processors are no longer programmed; instead, they optimize themselves in a manner analogous to evolutionary processes. Hardware becomes software.

Signs of such a computing future can already be read in the promises of Big Data, intelligent assistants (Siri, Cortana) and autonomous driving. Behind these different ventures lies a common concern: identifying patterns in large data sets as close to real time as possible. Precise data analysis matters less here than recognizing fuzzy or noisy trends and shapes, so digital precision is not required. These projects therefore suggest a return to analog computing methods, which can be far ahead of digital ones in terms of speed.

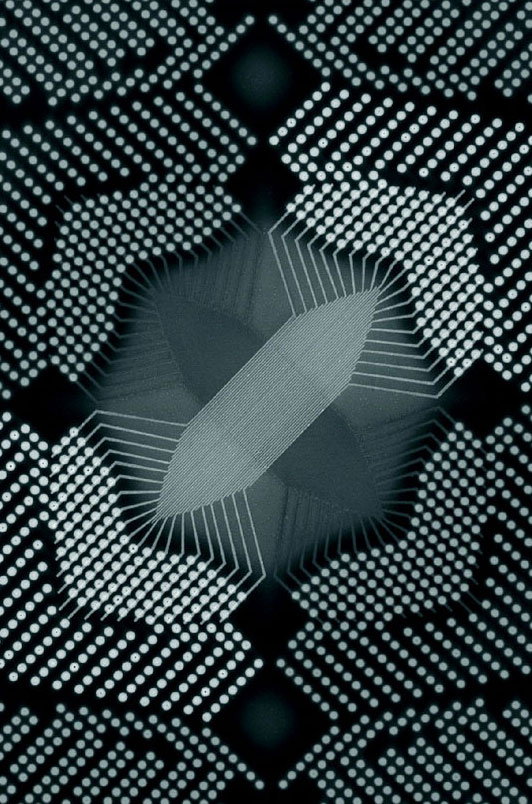

The memristor is the fourth fundamental passive circuit element, alongside the resistor, capacitor and inductor (coil). It differs from these in that, owing to its inherent hysteresis, it remembers its past state. As a variable, non-volatile resistor, it is used in hardware neural networks to store continuous connection weights. When operated digitally, on the other hand, the memristor suggests a different logic: the conjunction on which CMOS logic is built is replaced by material implication, which can be read both as a conditional and as a temporal link, and thus embodies Gotthard Günther's polycontextural logic, a temporal and context-dependent logic.
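The switch from conjunction to implication can be sketched at the level of Boolean logic. This is a minimal model assuming ideal two-state devices; it does not simulate device physics, and the function names are hypothetical. It relies on the known result that material implication together with a constant FALSE operation is functionally complete, so NAND, the negated conjunction from which CMOS logic is commonly built, can be composed from implication steps executed one after another.

```python
def imply(p: bool, q: bool) -> bool:
    """Material implication p -> q, i.e. (not p) or q."""
    return (not p) or q


def false_op() -> bool:
    """FALSE: unconditionally reset a device to logical 0."""
    return False


def nand_via_imply(p: bool, q: bool) -> bool:
    """NAND built only from IMPLY and FALSE.

    In memristive implication logic the result overwrites the target
    device (q <- p IMP q). Here that sequential overwrite is modelled
    as one work variable being reassigned step by step, so the order
    of the steps matters.
    """
    s = false_op()   # step 1: work device s := 0
    s = imply(q, s)  # step 2: s := q IMP 0, which equals not q
    s = imply(p, s)  # step 3: s := p IMP (not q), which equals not p or not q
    return s


if __name__ == "__main__":
    for p in (False, True):
        for q in (False, True):
            print(p, q, imply(p, q), nand_via_imply(p, q))
```

The temporal reading of implication is visible in the sketch: the same operation yields NAND only because its steps are applied in a fixed order, each overwriting the state left by the previous one.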

According to Michael Conrad, such architectures are urgently needed if we are to stop paying the 'price of programmability': programmability is bought at the expense of the polynomial growth in interactions among computing units that would otherwise be possible. Instead, all computational units should be able to interact with one another and be optimized together through an evolutionary process. This approach would abandon the von Neumann architecture, with its separation of memory and processing units and the associated bottleneck: the latency incurred whenever data is loaded from memory into the processor. The talk is then of memory computers, in which the same elements serve as both memory and computational modules and operate in parallel.
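How memory and computation can coincide is often illustrated with an idealized memristor crossbar. The sketch below assumes ideal devices with no wire resistance, noise or sneak currents, and the function name and values are hypothetical. Each cross-point stores a weight as a conductance G[i][j]; applying voltages V[i] to the rows makes each device pass a current G[i][j]·V[i] (Ohm's law), and these currents add up on each column wire (Kirchhoff's current law). The matrix-vector product thus takes place inside the memory array instead of fetching each weight into a processor.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_column_currents(conductances: Matrix,
                             row_voltages: List[float]) -> List[float]:
    """Idealized crossbar read-out.

    conductances[i][j]: conductance (siemens) of the memristor joining
    row i to column j. row_voltages[i]: voltage (volts) on row i.
    Column j carries sum_i G[i][j] * V[i] amperes.
    """
    n_rows = len(conductances)
    if n_rows != len(row_voltages):
        raise ValueError("one voltage per row is required")
    if n_rows == 0:
        return []
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    # In hardware all products form at once; these loops merely
    # simulate that parallel physics serially.
    for i in range(n_rows):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * row_voltages[i]
    return currents


if __name__ == "__main__":
    weights = [[1e-3, 2e-3],
               [3e-3, 4e-3]]
    print(crossbar_column_currents(weights, [0.1, 0.2]))  # approximately [0.0007, 0.001]
```

In a neural network, the conductance matrix plays the role of the connection weights and the voltages that of the input activations; because the weights never leave the array, the von Neumann transfer between memory and processor disappears for this operation.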

By extension, this points to a machine unconscious: these sub-symbolic analog computational processes run below the level of discrete digital symbols, and their state changes cannot be reconstructed in retrospect.

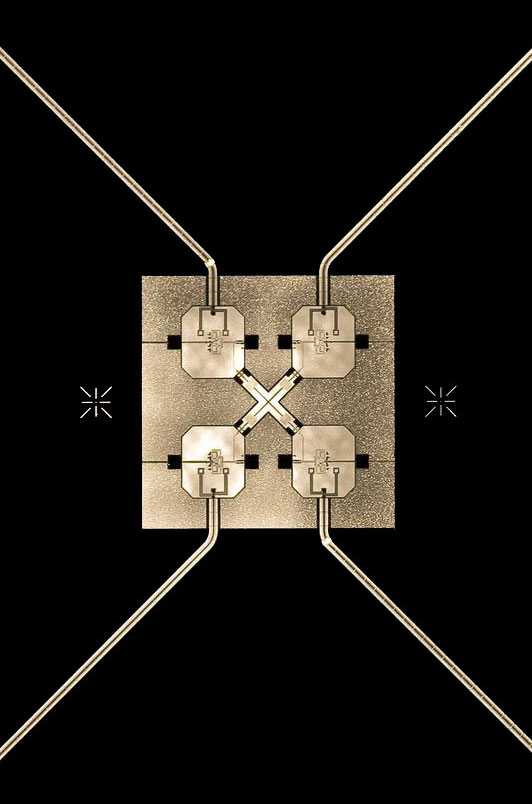

Quantum computers - Parallel universes, spooky action at a distance and teleportation are ideas that seem to become reality with quantum physics.

Fundamental to this is the phenomenon of quantum entanglement. Quantum objects, once entangled, remain correlated with each other even after spatial separation.

Phenomena occur that elude everyday understanding, such as retrocausality, non-locality and superposition, yet they can be calculated.

What distinguishes these computational processes is that many computations are performed simultaneously: the quantum computer is in a superposed state, and only upon measurement does this state collapse into an unambiguous result. That this result can change afterwards, and that the quantum computer need not be in one exact place but can exist in several at once, no longer has anything to do with the common view of concretely existing things.
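The calculational side of superposition, measurement and entanglement can be sketched with a tiny classical state-vector simulation. This is an illustration only, assuming two ideal, noise-free qubits and the standard textbook Hadamard and CNOT gates; the helper names are hypothetical. The four amplitudes belong to the basis states |00>, |01>, |10>, |11>; a measurement selects one basis state with probability equal to its squared amplitude (Born rule). The resulting entangled (Bell) state yields 00 or 11 at random, but never 01 or 10.

```python
import math
import random
from typing import List

# Amplitudes indexed by basis state: 0=|00>, 1=|01>, 2=|10>, 3=|11>.
# The left bit is qubit 0, the right bit is qubit 1.
State = List[complex]


def hadamard_on_qubit0(state: State) -> State:
    """Apply H to qubit 0: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2."""
    s = 1 / math.sqrt(2)
    new = [0j] * 4
    for q1 in (0, 1):
        a0 = state[0b00 | q1]  # amplitude with qubit 0 = 0
        a1 = state[0b10 | q1]  # amplitude with qubit 0 = 1
        new[0b00 | q1] = s * (a0 + a1)
        new[0b10 | q1] = s * (a0 - a1)
    return new


def cnot_0_to_1(state: State) -> State:
    """Flip qubit 1 when qubit 0 is 1: swaps the |10> and |11> amplitudes."""
    return [state[0], state[1], state[3], state[2]]


def probabilities(state: State) -> List[float]:
    """Born rule: the probability of each basis state is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


def measure(state: State, rng: random.Random) -> str:
    """Collapse to one definite basis state, returned as a bit string."""
    index = rng.choices(range(4), weights=probabilities(state))[0]
    return format(index, "02b")


if __name__ == "__main__":
    ground: State = [1 + 0j, 0j, 0j, 0j]             # |00>
    bell = cnot_0_to_1(hadamard_on_qubit0(ground))   # (|00> + |11>)/sqrt2
    rng = random.Random(42)
    print(probabilities(bell))
    print([measure(bell, rng) for _ in range(10)])
```

A classical simulation like this needs 2^n amplitudes for n qubits, which is why such simulations quickly become intractable as the number of qubits grows.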

Taken further, these computational concepts, still experimental at present, have consequences for other fields, such as neurobiology and its implications for human consciousness. Every computational concept maintains an intimate relationship with concepts of how the human brain works.

If thoughts resemble quantum phenomena, are they superposed, multivalued entities that are willfully reduced to a single value, carried over from a diffuse unconscious into a coherent conscious thought? If thoughts are correlated states, are they present globally throughout the brain, or even distributed among several brains, so that several people can think the same thought simultaneously? Are moods and emotions "in the air", or are they effects of the quantum field?

Text & Video:

→ Das Feld als nicht-lokaler Speicher (The Field as Non-Local Memory).

→ 10.000C. Zur Temporalität der Quantenverschränkung (10.000C. On the Temporality of Quantum Entanglement).

Workshop of the Chair of Media Theories (Humboldt-Universität zu Berlin) in cooperation with the Institute for Experimental Design and Media Cultures (Hochschule für Gestaltung und Kunst, Basel): → Zeitigungen von Medien. Epistemologische, gestalterische und experimentelle Alternativen zur (bisherigen) Mediengeschichtsschreibung (Temporalizations of Media: Epistemological, Design-Based and Experimental Alternatives to (Previous) Media Historiography).

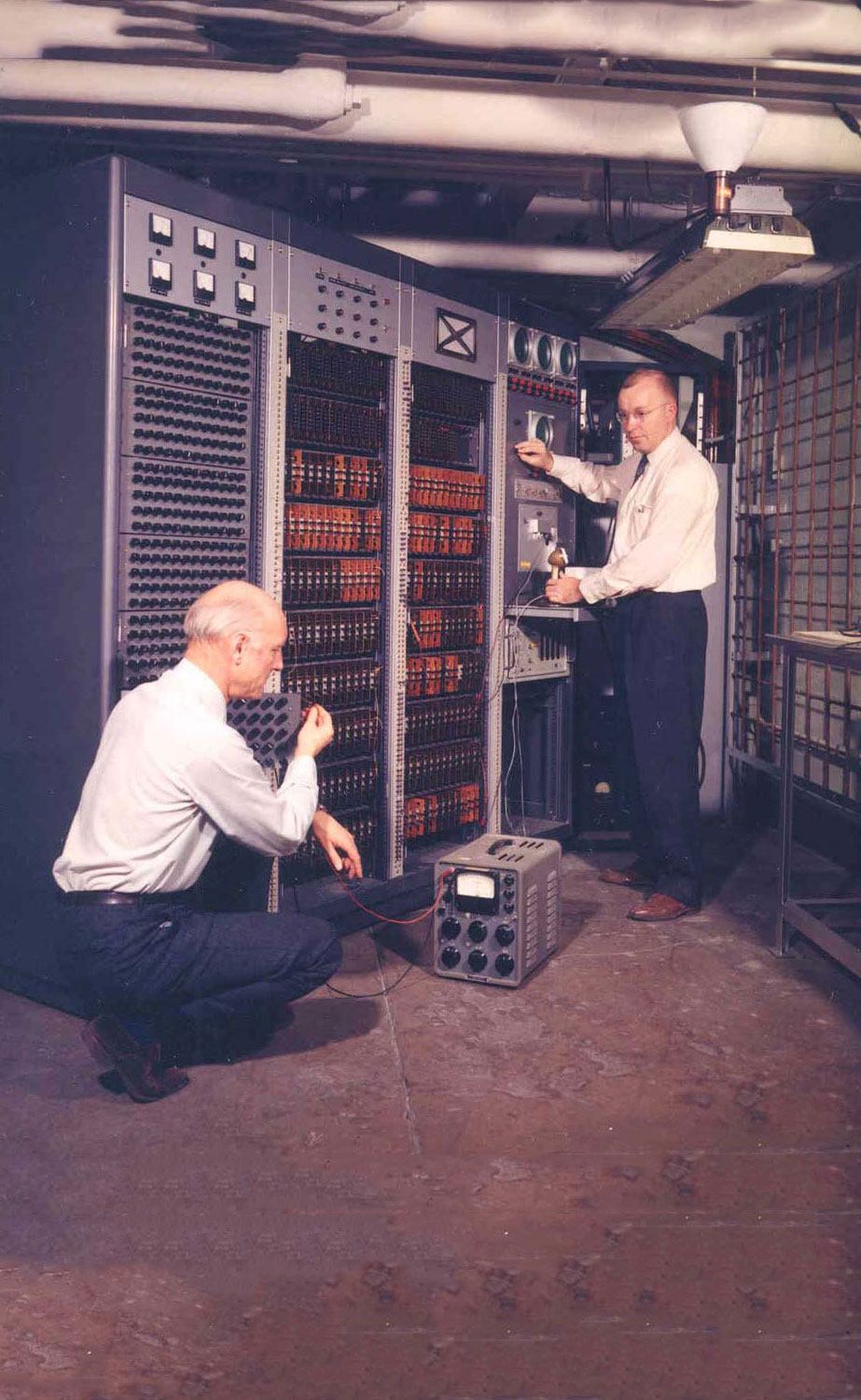

Non-trivial Machines - Since the advent of cybernetics in the 1940s, no distinction has been made between living beings and machines when it comes to describing how they process information.

Living beings and machines alike are regarded as organizationally closed systems that do not communicate with their environment but form internal representations of the external world on the basis of internal computational processes.

The circular flow of signals in cybernetic systems, the matching of their 'sense data' against the position of their 'limbs', consists of processes in which a state reproduces itself; in modern terms, this is recursivity.

Ideally, the system strives for a state of equilibrium (homeostasis). Environmental influences may force it to modify its target state, which can mark the transition from a trivial to a non-trivial machine. This is no longer a causal stimulus-response scheme: based on its past experience plus a random value, the system selects a new state and in turn tries to maintain it.
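The difference between trivial and non-trivial machines (terms from Heinz von Foerster's cybernetics) can be made concrete in a few lines. This is an illustrative sketch: the particular output and state-transition functions are arbitrary assumptions, and the random component mentioned in the text is left out so that the behavior stays reproducible. A trivial machine maps each input to the same output every time; a non-trivial machine's output also depends on an internal state that every operation changes, so the same input can produce different outputs depending on the machine's history.

```python
def trivial_machine(x: int) -> int:
    """Trivial machine: a fixed input-output mapping, independent of history."""
    return 2 * x


class NonTrivialMachine:
    """Non-trivial machine: y = F(x, z) and z' = Z(x, z) for internal state z."""

    def __init__(self, state: int = 0) -> None:
        self.state = state  # internal state, not observable from outside

    def step(self, x: int) -> int:
        y = x + self.state                 # output depends on input AND state
        self.state = (self.state + x) % 3  # each operation changes the state
        return y


if __name__ == "__main__":
    inputs = [1, 1, 1, 1]
    print([trivial_machine(x) for x in inputs])  # [2, 2, 2, 2]
    m = NonTrivialMachine()
    print([m.step(x) for x in inputs])           # [1, 2, 3, 1]
```

An outside observer who sees only inputs and outputs of the second machine cannot predict the next output without knowing its history, which is the sense in which it is no longer a stimulus-response scheme.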

Non-trivial machines form the basis of neural networks, the paradigm of intelligent self-optimizing systems.

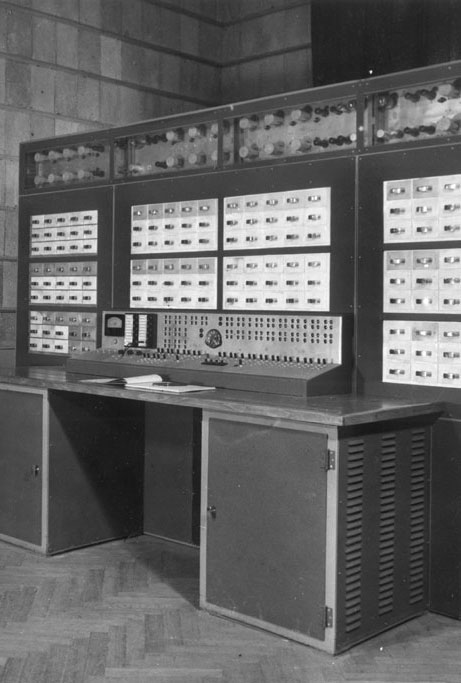

SETUN - Can all statements be reduced to binary decisions, i.e. yes or no, as happens in digital computers?

What about future events? Whether or not a naval battle will take place tomorrow, to use Aristotle's example, cannot be decided today.

Logicians conceive of the value of such undecidable statements as a boundary between yes and no, a value that belongs to neither. It represents a third value, determined as undecidable or infinite. Such values, however, have no native representation in binary digital computers, whose elementary unit, the bit, knows only two states.
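The third value can be made explicit with the three-valued logic that Jan Łukasiewicz developed around 1920, motivated by precisely this problem of future contingents. The sketch below is one possible encoding, not the only one: truth values are the fractions 0, 1/2 and 1, with 1/2 standing for "undecided". Negation is 1 - a, conjunction and disjunction are min and max, and implication is min(1, 1 - a + b). On a binary computer each of these values is, of course, still stored in bits; the third value exists only at the level of the program.

```python
from fractions import Fraction

FALSE, UNDECIDED, TRUE = Fraction(0), Fraction(1, 2), Fraction(1)


def neg(a: Fraction) -> Fraction:
    return 1 - a


def conj(a: Fraction, b: Fraction) -> Fraction:
    return min(a, b)


def disj(a: Fraction, b: Fraction) -> Fraction:
    return max(a, b)


def implies(a: Fraction, b: Fraction) -> Fraction:
    """Lukasiewicz implication: min(1, 1 - a + b)."""
    return min(Fraction(1), 1 - a + b)


if __name__ == "__main__":
    # "There will be a naval battle tomorrow" is neither true nor false today.
    battle = UNDECIDED
    print(disj(battle, neg(battle)))  # 1/2: excluded middle is no longer a tautology
    print(implies(battle, battle))    # 1: "if battle, then battle" remains true
```

Restricted to the values 0 and 1, these operations coincide with classical two-valued logic; the undecided value only changes the results where it actually occurs.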

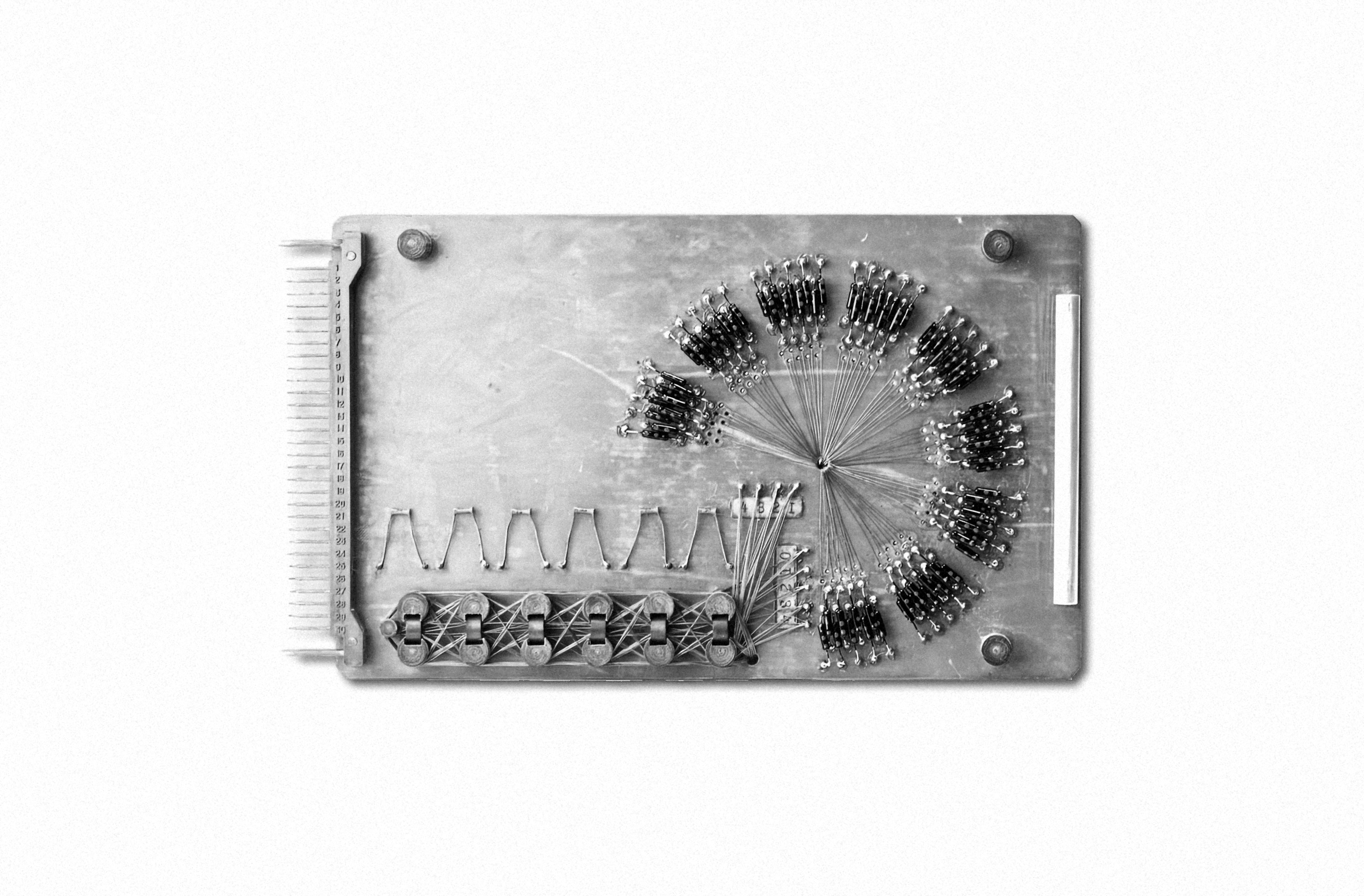

The only ternary computer ever built is considered to be the Soviet computer SETUN, developed at Moscow State University in 1958 and produced into the 1960s. It worked with balanced ternary arithmetic, using the values -1, 0 and 1. Ternary switching elements (the "flip-flap-flop") as well as ternary arithmetic operations (tritwise operators) were developed for it.
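Balanced ternary itself can be sketched in a few functions. This illustrates the number system only, not SETUN's actual circuitry or instruction set; the min/max/negation "tritwise" operators below are a common textbook choice and an assumption here. Each trit takes one of the values -1, 0, 1, and an integer n is written as the sum of t_i·3^i. A notable property: negating a number simply flips the sign of every trit, so no separate sign bit is needed.

```python
from typing import List


def to_balanced_ternary(n: int) -> List[int]:
    """Return the trits of n, least significant first, each in {-1, 0, 1}."""
    if n == 0:
        return [0]
    trits: List[int] = []
    while n != 0:
        r = n % 3          # Python's % always yields 0, 1 or 2
        if r == 2:
            r = -1         # digit 2 becomes -1 with a carry into the next place
        trits.append(r)
        n = (n - r) // 3   # exact division, since n - r is a multiple of 3
    return trits


def from_balanced_ternary(trits: List[int]) -> int:
    """Inverse of to_balanced_ternary: the sum of t_i * 3**i."""
    return sum(t * 3 ** i for i, t in enumerate(trits))


def trit_not(a: int) -> int:
    return -a


def trit_and(a: int, b: int) -> int:
    return min(a, b)


def trit_or(a: int, b: int) -> int:
    return max(a, b)


if __name__ == "__main__":
    print(to_balanced_ternary(5))   # [-1, -1, 1], i.e. -1 - 3 + 9
    print(to_balanced_ternary(-5))  # [1, 1, -1]
```

Because the digit set is symmetric around zero, positive and negative numbers are handled by the same circuitry, one of the arguments made for balanced ternary hardware.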

That the computer is named after the Setun, a river in Moscow, can be read as a metaphor for the machine's mode of operation: since the computing unit realized its alternating tritwise operations by means of two-phase alternating current, the image of interfering wave motions in water suggests itself.

A further simile draws on a well-known metaphor of Lacan's. For Lacan, the cybernetic door symbolizes binary logic: the door is either open or closed, like an electrical circuit in a computer. Under ternary logic, the door is more like a swinging door: it can be pushed open in either direction of passage without operating a latch, and its back-and-forth movement resembles a reverberation or resonance. Such a door is closed or open, if at all, only in the border zone.

Process Theories

Process philosophy - In everyday experience, time is perceived as a linear flow in which events follow one another in succession.

This perception of time is radically challenged by computer systems. Iterations, recursions and loops are control structures in program routines that allow for a conditional and branched flow of software.

They are characterized by difference and repetition and revive a cyclical conception of time.

This research area investigates to what extent humans can comprehend these temporalities of the computer, which are alien to human time perception. Three aspects in particular shape the model: time is discrete, the latencies between individual moments are extremely short, and these moments are recursively related to one another.

This temporal form originates in cybernetic modeling. It refers to the control loop that keeps a system within its operating range, in the way a thermostat maintains a desired temperature. The concept has historical antecedents in the process philosophies of Whitehead and Hegel.
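The thermostat's control loop can be sketched as a simple on/off controller running in discrete time steps. This is a minimal sketch; the toy room model (a fixed heating gain and a fixed heat loss per step) and all numbers are assumptions chosen for illustration. Each step measures the actual value, compares it with the desired value, and feeds the difference back into the next action; a small switching band (hysteresis) keeps the heater from toggling at every step.

```python
from typing import List


def simulate_thermostat(setpoint: float, start: float, steps: int,
                        band: float = 0.5, heat_rate: float = 1.0,
                        loss_rate: float = 0.3) -> List[float]:
    """Discrete-time feedback loop: measure, compare with setpoint, act.

    heat_rate and loss_rate are toy assumptions (degrees per step).
    Returns the temperature after each step, rounded to two decimals.
    """
    temp = start
    heater_on = False
    history: List[float] = []
    for _ in range(steps):
        error = setpoint - temp       # desired value minus actual value
        if error > band:
            heater_on = True          # too cold: switch heating on
        elif error < -band:
            heater_on = False         # too warm: switch heating off
        # inside the band the previous decision is kept (hysteresis)
        if heater_on:
            temp += heat_rate
        temp -= loss_rate             # constant heat loss to the environment
        history.append(round(temp, 2))
    return history


if __name__ == "__main__":
    print(simulate_thermostat(setpoint=20.0, start=15.0, steps=30))
```

After a warm-up phase the temperature oscillates in a narrow band around the setpoint: each step depends recursively on the state left by the previous one, which is the cyclical temporality described above.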

The feedback loop is evident in Hegel's dialectical thinking, which resolves a contradiction by reconciling actual and desired values. What is critical about the contradiction is that two antagonistic positions call for a revision that cannot be achieved by formal logic, but only in a subsequent reflexive temporal step, in which the contradiction is superseded by a new position.

Whitehead's model of time is similarly recursive but operates on an ontologically different level. Whitehead developed a process thinking that atomizes processes, breaking them down into microscopic events, which closely resembles the microtemporality of computer-based systems: recursively nested developments unfold across a multiplicity of temporal and spatial scales.

Texts:

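→ Rekursive Reflexionen – Zur Protokybernetik bei Hegel und Whitehead (Recursive Reflections: On Proto-Cybernetics in Hegel and Whitehead).

→ Hegels Idee des Lebens (Hegel's Idea of Life).

→ Whiteheads wirkliche Einzelwesen (Whitehead's Actual Entities).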

Field theory - For quite some time, the network has probably been the most common way of describing complex systems.

That networks, by virtue of their meshes, cannot capture everything is evident from Latour's concept of plasma, which fills the gaps between them.

Plasma, as an ionized spatial domain, provides potentials that are captured by or through media.

Mobile devices, smart dust, the Internet of Things: generally speaking, an omnipresent sensing and computing capacity constitutes a field-like infrastructure.

In networks, only existing actors can be linked together; how those actors come into being is not part of network theory. The realms outside and below the existing network instances are captured by the concept of plasma. This unformatted background contains potential new connections.

An approach based on the physical concept of the field is meant to reveal this primordial ground of relationships and networks. As the theoretical heir of the ether, the field, by means of field equations, frees the all-pervading primordial matter from tangibility: waves propagate and inform space. The status of objects changes: they are no longer regarded as constant entities situated in space but as temporary, local effects of the field, of an informed and structured space.

Following Mark Hansen's atmospheric concept of media, this paper attempts to grasp congruent media-technical operations by means of the field concept. Devices (PCs, handhelds, etc.) appear here merely as interfaces to a distributed measuring and computing architecture. Owing to the multitude of distributed media operations, the medium is spatially dispersed and no longer locally bounded.

Texts:

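→ Der feldtheoretische Hintergrund von Kollektivierungsprozessen (The Field-Theoretical Background of Collectivization Processes).

→ Embedded Agents – Zur Wir-Intentionalität im Group Agency Konzept (Embedded Agents: On We-Intentionality in the Group Agency Concept).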

Unit Operations - Does the world consist of objects, systems or processes?

Computer science prefers the first option. Object-oriented programming (OOP) is the dominant paradigm of computer science. Here, objects correspond to actors that take on certain tasks and report on their status, while their internal workings remain hidden from the outside.

The idea of black-boxing is not new; it goes back to Leibniz's monads, an exuberantly baroque cosmology.

The philosophical current of object-oriented ontology (OOO) is clearly related to this programming paradigm. According to OOO, the world consists of objects that coexist on an equal footing and interact with one another. These flat hierarchies level earlier distinctions: people, things and concepts coexist on the same plane.

As a consequence, attention shifts to metonymy, to contiguity, to remaining on the same semantic level. Variants and modifications become the focus, as is evident in the OOP concept of classes, from which objects are instantiated that differ from one another.

Ian Bogost applies this conception to computer games under the term unit operations. The player's avatar, items, and even the game environment are regarded as objects that encapsulate certain functions. Permuting these objects generates a variety of game operations.
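The idea that game operations arise from combining discrete units can be sketched with ordinary object-oriented code. Everything below is a hypothetical illustration: the class name, the energy attribute and the encounter rule are invented for the example and are not taken from Bogost. A class defines the shared interface, instances vary in their encapsulated state, and permuting the units yields different chains of encounters.

```python
from itertools import permutations
from typing import List, Tuple


class Unit:
    """A game unit: exposes behavior, hides its internal state."""

    def __init__(self, name: str, energy: int) -> None:
        self.name = name
        self._energy = energy  # encapsulated; outsiders see it only via report()

    def report(self) -> str:
        return f"{self.name}({self._energy})"

    def act_on(self, other: "Unit") -> str:
        # Hypothetical rule: the unit with more energy prevails in an encounter.
        winner = self if self._energy >= other._energy else other
        return f"{self.name}->{other.name}: {winner.name}"


def unit_operations(units: List[Unit]) -> List[Tuple[str, ...]]:
    """Every ordering of the units yields a different chain of encounters."""
    sequences = []
    for order in permutations(units):
        steps = tuple(a.act_on(b) for a, b in zip(order, order[1:]))
        sequences.append(steps)
    return sequences


if __name__ == "__main__":
    avatar, door, storm = Unit("avatar", 5), Unit("door", 3), Unit("storm", 8)
    for seq in unit_operations([avatar, door, storm]):
        print(seq)
```

Three units already yield six distinct sequences; adding a unit or instantiating a variant with different internal state changes the space of possible operations without touching the existing classes.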

The concept of object orientation can be taken further. According to Alan Shapiro, object orientation, with its discrete identities and differences, is to be followed by a software development based on similarity relations, which would realize what he calls expanded narration. This brings the trope of metaphor into focus.

Media dramaturgy - Events and sequences in fictional realities are characterized by a certain probability.

Traditional narratives, dramas and films follow established patterns. One of the most common is the Aristotelian conception of drama, which describes a self-contained arc of tension as well as the probable transitions between the phases of the plot.

In computer-based narratives, other transitions, progressions and patterns occur. All these different conceptions are based on numerical archetypes of a rhythmic, counting nature.

The distinction between objective and subjective probability separates what happens most of the time from what is generally accepted as plausible. According to Aristotle, it is precisely this credibility that makes the recipient more likely to perceive the plot of a drama as the imitation of a possible course of action; the plot thus moves the recipient emotionally, since the same could happen to them.

The action of a drama should be of a general nature and represent a meaningful concatenation of events. The criterion of meaningfulness also applies to the reversal (peripeteia), which produces pity and, later, purification (catharsis) from feelings of fear. The reversal should not occur by chance but should obey the criteria of probability, while at the same time being surprising, that is, contrary to the recipients' expectations.

The Aristotelian concept can be seen as a theatrical archetype. Archetypes are self-repeating, dynamic patterns of behavior that refer to an unmanifest basic form and vary considerably in their expression. The number archetype occupies an exposed position in two respects: it can be seen as the conscious aspect of every form of rational order and rhythmic behavior, and, in its seriality (the series of natural numbers), as a narrative sequence that is constitutive of all archetypes, since these manifest themselves in temporally ordered sequences.

Owing to its abstractness (all other archetypes are borrowed from the environment through analogy and are thus pictorial in nature), the number archetype is to be regarded as the foundation on which both heuristic and quantitative descriptions are built, and it can thus establish a complementarity between psychic and extra-psychic realms. The numerical archetypes, which appear in the dramatic sequence, in particular mathematical-physical curves (normal distribution, Poisson distribution, etc.) and in the functions used for the mathematical, computer-based generation of randomness (deterministic chaos, attractors), can be seen as constants that formally capture both unexpected events and incomplete knowledge.
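Deterministic chaos as a source of apparent randomness can be illustrated with the logistic map x_{n+1} = r·x_n·(1 - x_n), a standard textbook example chosen here for illustration; the text itself does not name a specific function. At r = 4 the sequence is fully determined by its starting value, yet two starting values that differ by only 10^-10 soon produce completely different trajectories. This is how a strictly deterministic rule can formally capture unexpected events and incomplete knowledge.

```python
from typing import List


def logistic_orbit(x0: float, r: float = 4.0, steps: int = 60) -> List[float]:
    """Iterate x -> r * x * (1 - x), starting from x0 in [0, 1]."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


if __name__ == "__main__":
    a = logistic_orbit(0.2)
    b = logistic_orbit(0.2 + 1e-10)  # a tiny "measurement error"
    for n in (0, 10, 30, 50):
        print(n, a[n], b[n], abs(a[n] - b[n]))
```

Running the same starting value twice always gives the identical sequence, while the tiny initial difference roughly doubles with every step on average until the two trajectories are unrelated.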
